Reinforcement Learning for Frequency Control in Superconducting RF Particle Accelerators

A comprehensive overview of global research initiatives applying AI to achieve autonomous, stable, and efficient accelerator systems

Real-Time Implementation

KIT's KINGFISHER system achieves microsecond-level latency for online RL training in live accelerator environments

Zero-Shot Transfer

CAS demonstrates successful simulation-to-reality transfer with TBSAC algorithm for RFQ optimization

Global Collaboration

RL4AA initiative connects 15+ institutions worldwide advancing autonomous accelerator systems

Introduction to RL in SRF Control

The application of Reinforcement Learning (RL) to the control of superconducting radiofrequency (SRF) systems in particle accelerators represents a paradigm shift from traditional model-based control to autonomous, adaptive systems. Research institutions worldwide are actively exploring RL to address complex beam dynamics challenges that have traditionally resisted conventional control approaches.

Key Insight

RL enables controllers to learn optimal strategies directly from interaction with the accelerator environment, without requiring complete and accurate a-priori models of complex, non-linear systems.

These efforts range from stabilizing beam orbits and optimizing injector performance to controlling high-dimensional systems such as superconducting linear accelerators. Together they show a clear trend toward more autonomous, stable, and efficient accelerator systems through AI integration.

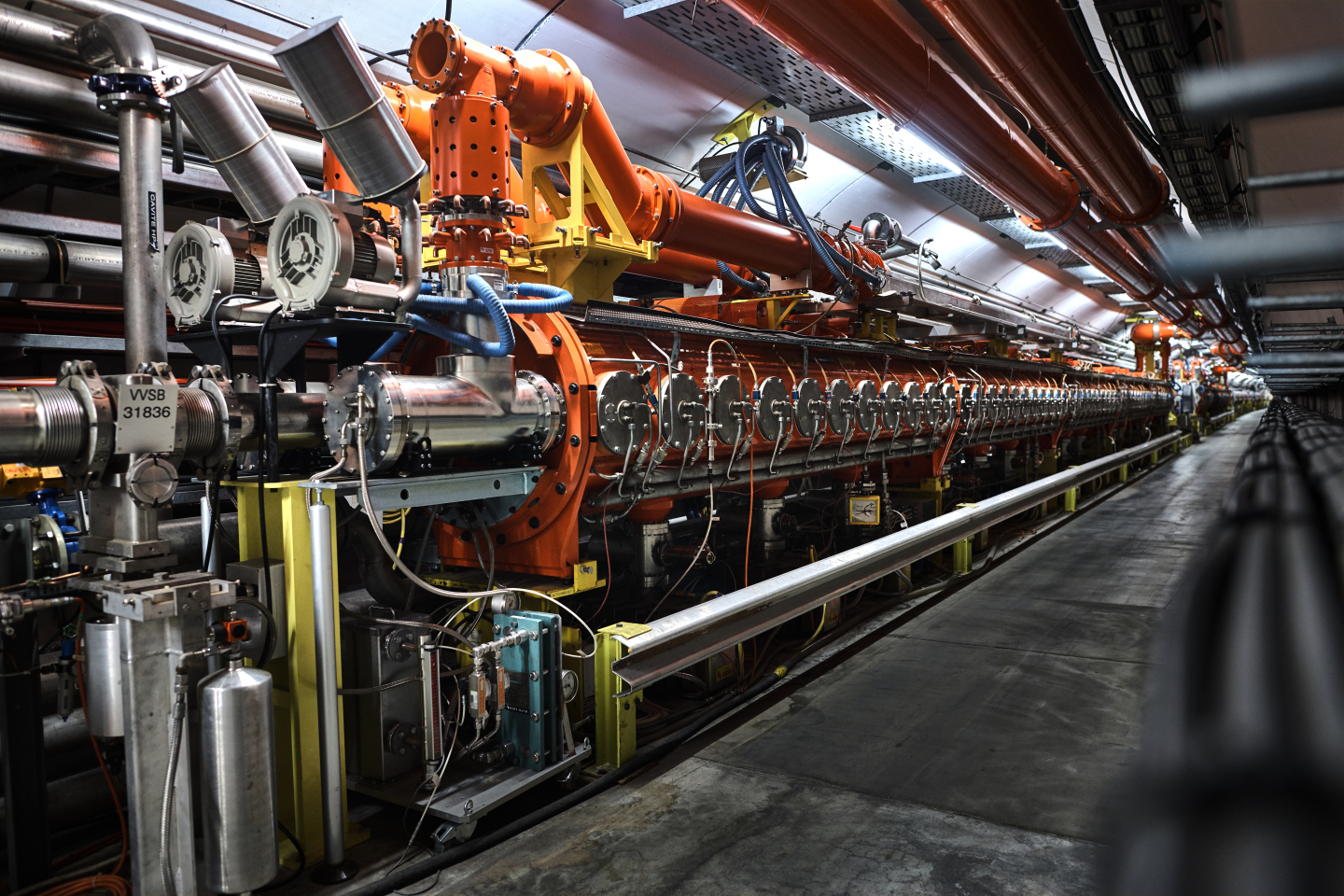

Karlsruhe Institute of Technology: Real-Time Control at KARA

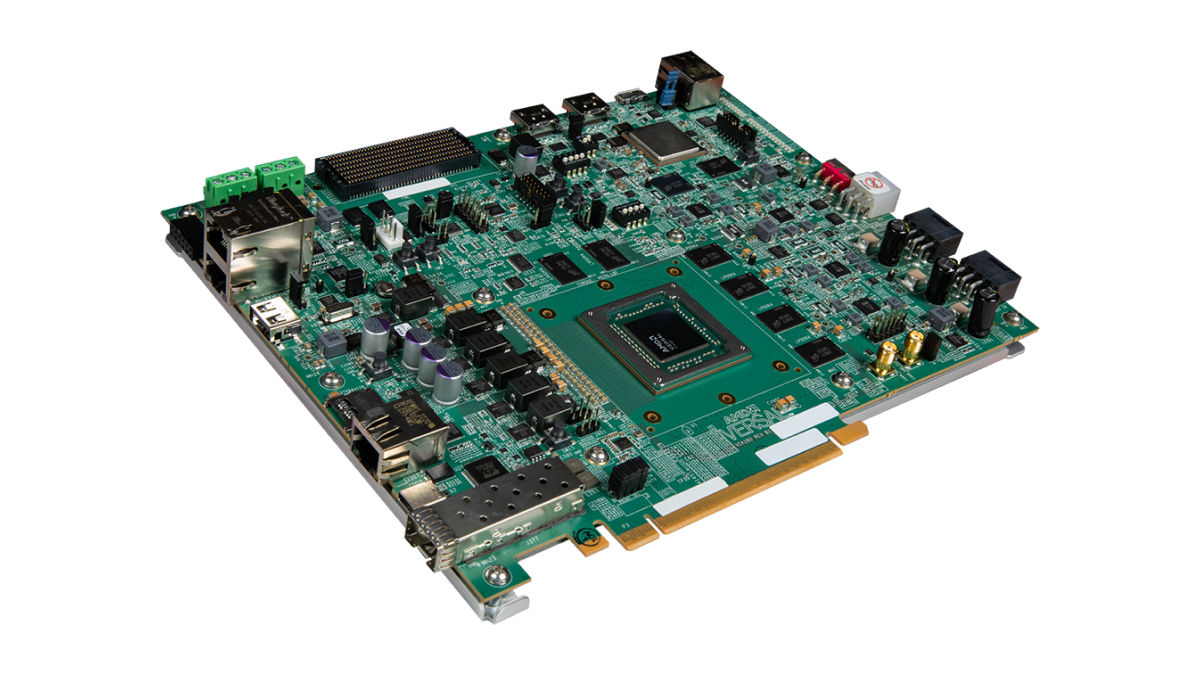

KINGFISHER System Architecture

The KIT team developed the KINGFISHER system (Karat Intelligent Next-Generation Feedback Implementation System High-speed and Enhanced Response) to meet stringent real-time constraints for controlling microbunching instability (MBI) at the Karlsruhe Research Accelerator (KARA).

Technical Specifications

- AMD-Xilinx Versal heterogeneous computing platform

- Microsecond-level latency execution

- Online learning through "experience accumulator"

- Direct RF system modulation capability

The RL agent at KARA controls microbunching instability by modulating the accelerator's RF system in one of two ways: through the kicker cavity of the existing bunch-by-bunch feedback system, or by directly modulating the accelerating cavities of the main RF system. This represents the first experimental attempt to control MBI using RL with online training [725].
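To make the idea of accumulating experience for online training concrete, here is a minimal Python sketch of a fixed-capacity transition buffer. It is not the KINGFISHER implementation: the class name `ExperienceAccumulator`, the `Transition` fields, and the uniform sampling strategy are all assumptions chosen for illustration.

```python
import random
from collections import deque
from typing import List, NamedTuple, Sequence


class Transition(NamedTuple):
    """One interaction step; the field meanings are illustrative assumptions."""
    observation: Sequence[float]       # e.g. features of the measured instability signal
    action: float                      # e.g. an RF modulation setting
    reward: float
    next_observation: Sequence[float]


class ExperienceAccumulator:
    """Fixed-capacity buffer: once full, the oldest transitions are dropped first."""

    def __init__(self, capacity: int) -> None:
        self._buffer = deque(maxlen=capacity)

    def add(self, transition: Transition) -> None:
        self._buffer.append(transition)

    def sample(self, batch_size: int, rng: random.Random) -> List[Transition]:
        # Uniform sampling without replacement, capped at the current buffer size.
        k = min(batch_size, len(self._buffer))
        return rng.sample(list(self._buffer), k)

    def __len__(self) -> int:
        return len(self._buffer)
```

In a real-time system the buffer would typically live close to the hardware while training runs on a separate processor; the sketch only shows the data flow, not that partitioning.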

Chinese Academy of Sciences: TBSAC Algorithm

Trend-Based Soft Actor-Critic (TBSAC)

The CAS team developed TBSAC, a novel RL algorithm specifically tailored for robust control of particle beams in superconducting linear accelerators. The algorithm incorporates temporal information (trends of beam position monitor readings) directly into the agent's observation space.
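One way to picture a trend-based observation is to append, for each beam position monitor (BPM), the slope of its recent readings to the latest reading itself. The Python sketch below does this with a least-squares slope over a short history window. The function names, the window layout, and the choice of a linear slope as the "trend" are assumptions for illustration and are not taken from the TBSAC publication.

```python
from typing import List, Sequence


def least_squares_slope(values: Sequence[float]) -> float:
    """Slope of a straight-line fit to equally spaced samples (per time step)."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two samples to estimate a trend")
    mean_t = (n - 1) / 2.0
    mean_v = sum(values) / n
    numerator = sum((t - mean_t) * (v - mean_v) for t, v in enumerate(values))
    denominator = sum((t - mean_t) ** 2 for t in range(n))
    return numerator / denominator


def trend_observation(bpm_history: Sequence[Sequence[float]]) -> List[float]:
    """Build an observation from a history of BPM readings.

    bpm_history[t][i] is the reading of BPM i at time step t, oldest first.
    The result is the latest readings followed by one trend value per BPM.
    """
    latest = list(bpm_history[-1])
    slopes = [
        least_squares_slope([row[i] for row in bpm_history])
        for i in range(len(latest))
    ]
    return latest + slopes
```

For example, a history of `[[0.0, 1.0], [1.0, 1.0], [2.0, 1.0]]` yields the latest readings `2.0` and `1.0` followed by the trends `1.0` (steadily rising) and `0.0` (flat).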

Algorithm Components

- Soft Actor-Critic (SAC) framework

- Trend-based observation space

- Entropy maximization for exploration

- Off-policy learning stability
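For reference, the entropy-maximizing objective of the standard SAC algorithm can be written as below. This is the general SAC formulation, not a TBSAC-specific result; the symbols are the usual ones: $r$ is the reward, $\rho_\pi$ the state-action distribution induced by the policy $\pi$, $\mathcal{H}$ the entropy of the policy at a given state, and $\alpha$ a temperature that weights exploration against reward.

```latex
J(\pi) = \sum_{t} \mathbb{E}_{(s_t, a_t) \sim \rho_\pi}
         \left[ r(s_t, a_t) + \alpha \, \mathcal{H}\!\left( \pi(\cdot \mid s_t) \right) \right],
\qquad
\mathcal{H}\!\left( \pi(\cdot \mid s_t) \right)
  = - \mathbb{E}_{a \sim \pi(\cdot \mid s_t)} \left[ \log \pi(a \mid s_t) \right]
```

Setting $\alpha = 0$ recovers the ordinary expected-return objective; a larger $\alpha$ rewards the agent for keeping its action distribution broad.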

Performance Achievements

- RFQ transmission efficiency >85%

- Optimization time: 2 minutes

- Zero-shot transfer capability

- Robust to noise and non-linearities

Breakthrough Achievement

The TBSAC agent achieved "zero-shot" transfer from simulation to the real accelerator, eliminating the need for time-consuming and potentially disruptive online learning on operational machines [506].

Helmholtz-Zentrum Berlin & University of Kassel: SRF Gun Optimization

Deep Reinforcement Learning for SRF Photoelectron Injectors

A collaboration between HZB and the University of Kassel has developed a Deep Reinforcement Learning (DRL) system for optimizing superconducting RF photoelectron injectors (SRF guns), which are critical for generating high-brightness electron beams.

The DRL agent learns to control the SRF gun by interacting with a high-fidelity simulation, exploring a large parameter space that includes RF phase and amplitude, laser timing, and cathode conditions. The objective is to improve beam quality, in particular by minimizing transverse emittance and maximizing bunch charge [798].

Optimization Goals

- Minimize beam transverse emittance

- Maximize bunch charge

- Optimize RF phase/amplitude

- Control laser parameters

- Manage cathode conditions
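The two competing goals above (low emittance, high bunch charge) have to be folded into a single scalar reward before an RL agent can optimize them. The Python sketch below shows one simple way to do that with a weighted difference of normalized quantities. The function name, the reference values, the units, and the weighting are hypothetical and are not taken from the HZB and Kassel work.

```python
def srf_gun_reward(
    emittance_um: float,
    bunch_charge_pc: float,
    emittance_ref_um: float = 1.0,    # hypothetical normalization value
    charge_ref_pc: float = 100.0,     # hypothetical normalization value
    charge_weight: float = 1.0,       # hypothetical trade-off weight
) -> float:
    """Scalar reward: higher for more charge, lower for larger emittance."""
    if emittance_um <= 0.0 or emittance_ref_um <= 0.0 or charge_ref_pc <= 0.0:
        raise ValueError("emittance and reference values must be positive")
    charge_term = charge_weight * bunch_charge_pc / charge_ref_pc
    emittance_term = emittance_um / emittance_ref_um
    return charge_term - emittance_term
```

With the default references, a beam at exactly the reference emittance and charge scores zero, and the weight `charge_weight` sets how much emittance growth the agent will accept in exchange for extra charge.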

Simulation-Based Training Approach

The research relies on a high-fidelity "digital twin" simulation that models the entire process of electron beam generation, including photoemission, RF field interaction, space charge effects, and beam transport. This simulation-based training methodology allows the DRL agent to undergo millions of training episodes safely and efficiently [802].

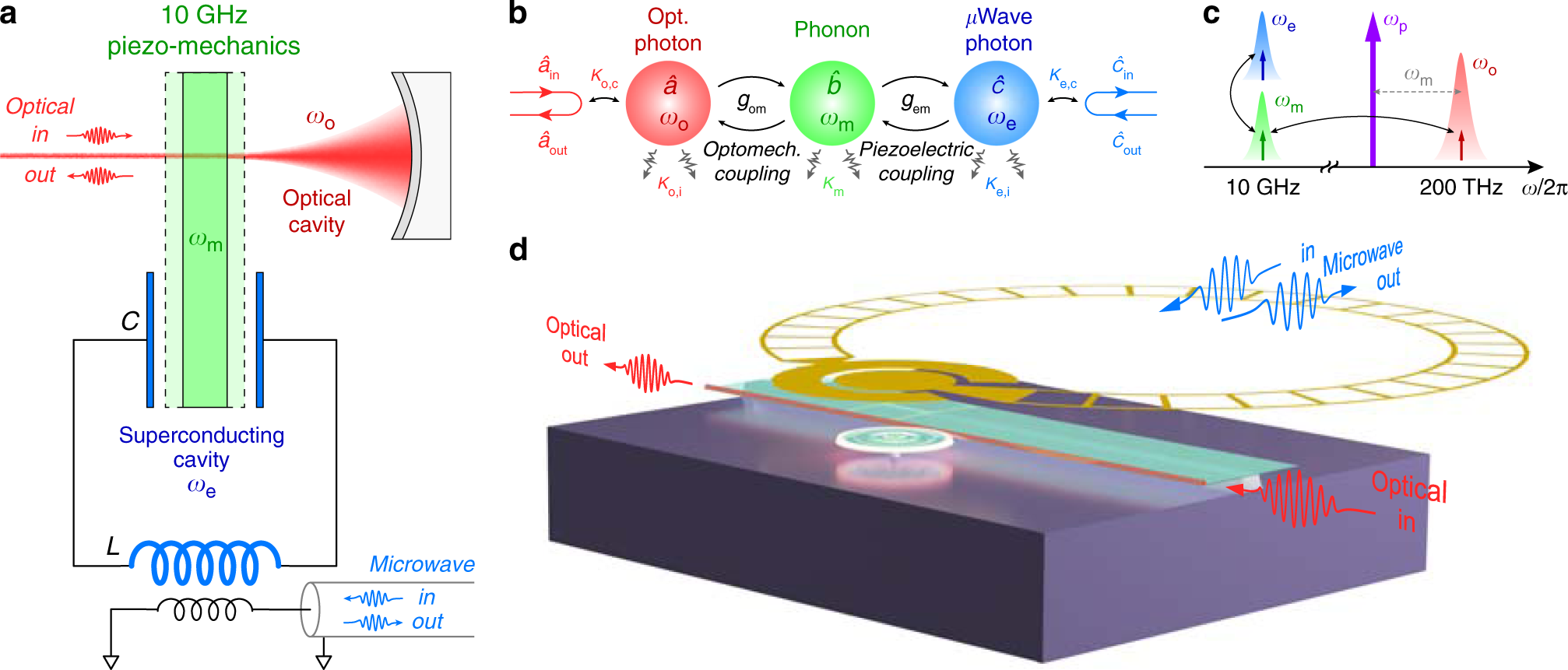

University of New Mexico & SLAC: Neural Networks for Resonance Control

LCLS-II Application

The collaborative research between UNM and SLAC is directly motivated by the operational requirements of the LCLS-II project, which features SRF cavities operating with extremely high loaded quality factors (Q_L) and bandwidths as narrow as 10 Hz.
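The link between loaded quality factor and bandwidth, and why a few hertz of detuning matters for such cavities, can be illustrated with the standard resonator relations: half-bandwidth $f_{1/2} = f_0 / (2 Q_L)$, and a steady-state field amplitude that falls as $1/\sqrt{1 + (\Delta f / f_{1/2})^2}$ at fixed drive. The Python sketch below evaluates these. The function names are hypothetical, and the example $Q_L$ in the usage note is an assumption picked so that the half-bandwidth comes out at 10 Hz for a 1.3 GHz cavity; the source does not say whether its 10 Hz figure refers to the full or the half bandwidth.

```python
import math
from typing import Tuple


def half_bandwidth_hz(f0_hz: float, loaded_q: float) -> float:
    """Cavity half-bandwidth f_1/2 = f0 / (2 * Q_L)."""
    return f0_hz / (2.0 * loaded_q)


def detuned_response(
    detuning_hz: float, f0_hz: float, loaded_q: float
) -> Tuple[float, float]:
    """Relative field amplitude and detuning angle (radians) at fixed drive.

    The sign convention of the angle varies between references; here a
    positive detuning gives a positive angle.
    """
    x = detuning_hz / half_bandwidth_hz(f0_hz, loaded_q)
    amplitude = 1.0 / math.sqrt(1.0 + x * x)
    angle_rad = math.atan(x)
    return amplitude, angle_rad
```

For instance, `half_bandwidth_hz(1.3e9, 6.5e7)` gives 10 Hz, and a detuning of the same 10 Hz then reduces the field amplitude to about 71% of its on-resonance value with a 45 degree phase shift, which is why microphonics at the level of a few hertz must be actively compensated.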

Technical Challenges

- Microphonics-induced detuning

- Lorentz force detuning compensation

- 10 Hz bandwidth requirements

- Real-time adaptive control

The ML-based system utilizes piezoelectric actuators for adaptive tuning of cavity frequency, with the controller learning to distinguish between microphonics and Lorentz force detuning signatures. The research demonstrates the potential for improved resonance control compared to traditional fixed-parameter controllers [41].

CERN: RL4AA Collaboration and Autonomous LLRF Systems

Reinforcement Learning for Autonomous Accelerators (RL4AA)

The RL4AA collaboration brings together researchers from CERN, DESY, KIT, and other institutions to advance the application of Reinforcement Learning in accelerator physics. The collaboration focuses on developing high-fidelity simulations, addressing transfer learning challenges, and creating robust RL algorithms for safety-critical applications [306].

Research Focus Areas

- Detuning control optimization

- Power consumption reduction

- Predictive disturbance compensation

- Adaptive feedback systems

Collaborative Activities

- RL4AA'23 workshop at KIT

- Knowledge sharing platforms

- Joint algorithm development

- Benchmarking and validation

Fermilab: AI Applications in Accelerator Systems

FPGA Implementation of Neural Networks

Fermilab researchers developed a neural network trained via Reinforcement Learning to regulate the Gradient Magnet Power Supply (GMPS) at the Fermilab Booster. The breakthrough achievement was compiling the trained neural network to execute on a Field-Programmable Gate Array (FPGA) for real-time control with predictable latency [393].
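Running a trained network on an FPGA usually means replacing floating-point arithmetic with fixed-point integers so that every operation has a fixed, predictable cost. The Python sketch below emulates one dense layer with ReLU in fixed-point arithmetic. It is only an illustration of the idea: the number of fraction bits, the function names, and the layer shape are assumptions, and it does not reproduce the toolchain or network used at Fermilab.

```python
from typing import List, Sequence

FRACTION_BITS = 8  # assumed precision: values are stored as integer * 2**-8


def to_fixed(x: float) -> int:
    """Quantize a float to the nearest fixed-point integer."""
    return int(round(x * (1 << FRACTION_BITS)))


def to_float(q: int) -> float:
    """Convert a fixed-point integer back to a float."""
    return q / (1 << FRACTION_BITS)


def dense_relu_fixed(
    inputs_q: Sequence[int],
    weights_q: Sequence[Sequence[int]],
    biases_q: Sequence[int],
) -> List[int]:
    """One dense layer followed by ReLU, using integer arithmetic only."""
    outputs = []
    for row, bias in zip(weights_q, biases_q):
        # Products carry 2 * FRACTION_BITS fraction bits, so align the bias first.
        acc = bias << FRACTION_BITS
        for w, x in zip(row, inputs_q):
            acc += w * x
        acc >>= FRACTION_BITS        # rescale back to FRACTION_BITS (rounds down)
        outputs.append(max(acc, 0))  # ReLU
    return outputs
```

A layer would be called as `dense_relu_fixed([to_fixed(v) for v in inputs], weights_q, biases_q)` with weights and biases quantized once offline; the choice of `FRACTION_BITS` trades numerical accuracy against FPGA resource use.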

Key Innovation

This was the first machine-learning-based control algorithm implemented on an FPGA for controls at the Fermilab accelerator complex, enabling real-time AI control with microsecond-scale response times.

Iterative Learning Control for SRF Cavities

Fermilab has pioneered the application of Iterative Learning Control (ILC) for compensating transient beam loading in high-current SRF accelerators. The modified ILC algorithm, designed for FPGA implementation, successfully suppresses beam-induced gradient fluctuations [821].

Implementation Features

- Rectangle-pulse beam profile approximation

- Reduced computational complexity

- Real-time FPGA execution

- Experimental validation at CAFe facility
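The core idea of iterative learning control is that a disturbance repeating identically on every pulse can be cancelled by a feedforward table that is corrected a little after each pulse. The Python sketch below shows a textbook proportional-type update, `u[k+1] = u[k] + gain * e[k]`, acting on a rectangular beam-loading disturbance. It is not the modified algorithm described in [821]: the memoryless plant model, the function names, and all numerical values are assumptions for illustration.

```python
from typing import List, Sequence, Tuple


def rectangular_beam_loading(
    n_samples: int, start: int, stop: int, droop: float
) -> List[float]:
    """Gradient drop of size `droop` while the beam is present, zero elsewhere."""
    return [-droop if start <= i < stop else 0.0 for i in range(n_samples)]


def run_ilc(
    disturbance: Sequence[float],
    setpoint: float,
    learning_gain: float,
    n_pulses: int,
) -> Tuple[List[float], List[float]]:
    """Run P-type ILC over repeated pulses; return the table and peak errors."""
    feedforward = [0.0] * len(disturbance)
    peak_errors = []
    for _ in range(n_pulses):
        # Toy memoryless plant: gradient = setpoint + feedforward + disturbance.
        errors = [
            setpoint - (setpoint + u + d)
            for u, d in zip(feedforward, disturbance)
        ]
        peak_errors.append(max(abs(e) for e in errors))
        # Learning step: correct each sample of the table by its own error.
        feedforward = [u + learning_gain * e for u, e in zip(feedforward, errors)]
    return feedforward, peak_errors
```

In this toy model the peak error shrinks by a factor of `1 - learning_gain` per pulse, so a gain of 0.5 halves it each time. A real cavity has dynamics and loop delay, which is why practical ILC schemes add filtering and time-shift terms to the update.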

Global Research Landscape and Future Directions

Leading Institutions

- KIT (Germany) - Real-time RL implementation

- CAS (China) - TBSAC algorithm development

- CERN (Switzerland) - RL4AA coordination

- Fermilab (USA) - FPGA-based AI control

- SLAC (USA) - LCLS-II applications

- HZB (Germany) - SRF gun optimization

Technical Approaches

- Deep RL - High-dimensional control

- Zero-shot transfer - Simulation to reality

- Hardware acceleration - FPGA implementation

- Digital twins - Simulation-based training

- Adaptive feedback - Real-time tuning

Future Applications

- Fusion energy devices - Plasma control

- Medical accelerators - Treatment optimization

- Industrial applications - Process control

- Next-gen colliders - Autonomous operation

Emerging Research Directions

Technical Challenges

- Real-time hardware constraints

- Safety-critical system validation

- Transfer learning robustness

- High-fidelity simulation accuracy

Research Opportunities

- Multi-agent RL systems

- Federated learning approaches

- Explainable AI for physics insight

- Cross-institutional benchmarking

Global Impact

The convergence of AI and accelerator physics through initiatives like RL4AA is paving the way for a new generation of autonomous scientific facilities that will enable discoveries impossible with current technology, while fostering international collaboration in fundamental research.